Synchronising an augmented-reality experience to a broadcast programme on an HbbTV 2 device

Version 2 of the HbbTV standard introduces several new features. Probably the most interesting of these are aimed at combining mobile devices such as tablets or smartphones with HbbTV devices. These so-called companion-screen features make it possible to create distributed media experiences, in which the TV experience is extended from the TV to a secondary device. They are key enablers for the convergence of TV services and media services for connected mobile devices.

Getting in sync with the TV content

In a previous blog post we introduced the DIAL protocol for the automatic discovery of HbbTV devices and the launching of HbbTV applications from a companion device. In this post, we demonstrate the DVB Companion Screens and Streams (DVB-CSS) set of protocols, which, in a nutshell, enable an HbbTV device to:

- Share content information: companion-screen apps know what content is being presented on the TV screen

- Share timing information: companion-screen apps know the current position on the content timeline on the TV

Companion applications can use this information to present content that is contextually related to, and synchronised in time with, the TV content.
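To make the timing information more concrete: in DVB-CSS the TV reports its timeline position in Control Timestamps (CSS-TS protocol), each pairing a position on the content timeline with a time on a shared wall clock that the companion keeps aligned with the TV via CSS-WC. The sketch below shows how a companion might extrapolate the current content position from the latest timestamp. It assumes the JSON field names `contentTime`, `wallClockTime` and `timelineSpeedMultiplier` and, in the usage example, a 90 kHz timeline such as MPEG-TS PTS; the class and function names are hypothetical, and obtaining the synchronised wall-clock value is left out.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class ControlTimestamp:
    """Hypothetical container for one CSS-TS Control Timestamp."""
    content_time: Optional[int]  # ticks on the content timeline; None if unavailable
    wall_clock_time: int         # nanoseconds on the synchronised wall clock
    speed: float                 # timeline speed: 1.0 = normal play, 0.0 = paused


def parse_control_timestamp(message: str) -> ControlTimestamp:
    """Parse a Control Timestamp message (field names assumed as described above)."""
    msg = json.loads(message)
    content_time = msg.get("contentTime")
    speed = msg.get("timelineSpeedMultiplier")
    return ControlTimestamp(
        # Large integers are sent as strings in JSON, so convert explicitly.
        content_time=int(content_time) if content_time is not None else None,
        wall_clock_time=int(msg["wallClockTime"]),
        # Treat a missing speed as "not advancing".
        speed=float(speed) if speed is not None else 0.0,
    )


def estimate_content_time(ts: ControlTimestamp, wall_clock_now_ns: int,
                          ticks_per_second: int) -> Optional[float]:
    """Extrapolate the TV's current content-timeline position in ticks."""
    if ts.content_time is None:
        return None  # the TV currently has no position on this timeline
    elapsed_ns = wall_clock_now_ns - ts.wall_clock_time
    # Content time advances at `speed` times the rate of the wall clock.
    return ts.content_time + elapsed_ns * ts.speed * ticks_per_second / 1e9


if __name__ == "__main__":
    ts = parse_control_timestamp(
        '{"contentTime": "900000", "wallClockTime": "10000000000",'
        ' "timelineSpeedMultiplier": 1.0}'
    )
    # Two seconds of wall-clock time later, on a 90 kHz timeline:
    print(estimate_content_time(ts, 12_000_000_000, 90_000))
```

Because the timestamp is anchored to a shared wall clock rather than to the moment the message arrives, network delay on the timestamp message does not shift the estimate; only the accuracy of the wall-clock alignment matters.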

Synchronised AR companion

Early demonstrations applied the DVB-CSS protocols to tablets and smartphones, for example those by BBC R&D at IBC 2015, and by IRT and the EU project ImmersiaTV at IBC 2016. However, DVB-CSS is not limited to these platforms. At IRT we are currently exploring how different 360° video, virtual reality (VR) and augmented reality (AR) platforms can serve viewers and producers of broadcast media. AR platforms include Microsoft’s HoloLens, which projects three-dimensional virtual objects (holograms) into the user’s field of vision.

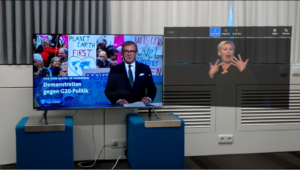

We built a virtual display application for HoloLens which plays back video in sync with the running TV programme. On this basis, we created two experiences. In the first, the HoloLens displays a video of a sign-language interpreter next to a (real) TV set showing an edition of the ARD news programme “Tagesschau”. The second is an experimental short film which uses the additional display as a stylistic element for storytelling. The video below gives an impression of what these two experiences look like.
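Keeping a local video player in sync with the TV typically means comparing the player's position with the position extrapolated from the TV's timeline and correcting any difference. The sketch below illustrates one common strategy, not necessarily the one used in our HoloLens application: ignore tiny errors, seek on large ones, and slightly speed up or slow down playback for everything in between. The function name and all threshold values are hypothetical.

```python
from typing import Tuple


def correction(expected_s: float, actual_s: float,
               tolerance_s: float = 0.04,
               seek_threshold_s: float = 1.0,
               max_rate_adjust: float = 0.05) -> Tuple[str, float]:
    """Decide how a player should correct its position (hypothetical policy).

    Returns ("none", 1.0), ("seek", target_position_s) or ("rate", playback_rate).
    """
    error = expected_s - actual_s  # positive: the player is behind the TV
    if abs(error) <= tolerance_s:
        # Close enough that a viewer is unlikely to notice.
        return ("none", 1.0)
    if abs(error) >= seek_threshold_s:
        # Too far off to catch up smoothly: jump straight to the TV position.
        return ("seek", expected_s)
    # Moderate error: nudge the playback rate, clamped to a small range,
    # so the player drifts back into sync without a visible jump.
    adjust = max(-max_rate_adjust, min(max_rate_adjust, error))
    return ("rate", 1.0 + adjust)


if __name__ == "__main__":
    print(correction(10.0, 9.99))  # within tolerance
    print(correction(10.0, 8.0))   # large error
    print(correction(10.0, 9.5))   # moderate error, player behind
```

Seeking gives the fastest correction but causes a visible jump, whereas rate adjustment is smoother but slower; the thresholds trade these off against each other.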

Experience it in real life

We will be showing both demos at upcoming trade fairs. Come and visit us at our booths at IFA and IBC 2017.

Acknowledgements

Many thanks to Nick Godfrey. Nick is a student at the University of Cincinnati. He worked with IRT during a three-week internship and produced the short film “Mondays”, an excerpt of which is shown in the video. Thanks also to our colleagues from ARD-aktuell and Phoenix for providing the “Tagesschau” episode and the accompanying video of the sign-language interpreter.

The research leading to these results has received funding from the European Union’s H2020-ICT-2015 programme under grant agreement No. 687655 (2-IMMERSE).