Social media content is becoming an ever more integral part of broadcasting. Incorporating social media services not only makes it possible to include user-generated content, but also provides a more immersive and engaging experience for viewers by using social media platforms as interactive feedback channels. Bringing this content directly into broadcasts, however, often still involves a significant amount of manual work. This article describes how this extra work can be reduced by simplifying the processing chain, from content selection to play-out.

Challenges for Embedding Social Media

Most solutions available in the broadcast domain today for embedding social media (Tweets, Facebook or Instagram posts) require at least three different people in the workflow: an editor who browses and selects the social media content, a graphics operator who integrates it into the broadcast graphic, and finally another operator who puts the final mix of broadcast graphic and video signal on air. In addition, a moderator is usually needed in the studio to interact with the selected content. For many productions this is simply not feasible. Smaller broadcasters in particular do not have the staff to assign that many people to a processing chain for bringing social media content on air.

Architecture and Platform

To find a solution to this problem together with other experienced partners, we participated in the EU-funded collaborative research and innovation project VisualMedia. The consortium comprised 12 partners from media organisations, tech companies and academia, with the goal of creating a solution more suitable for everyday use.

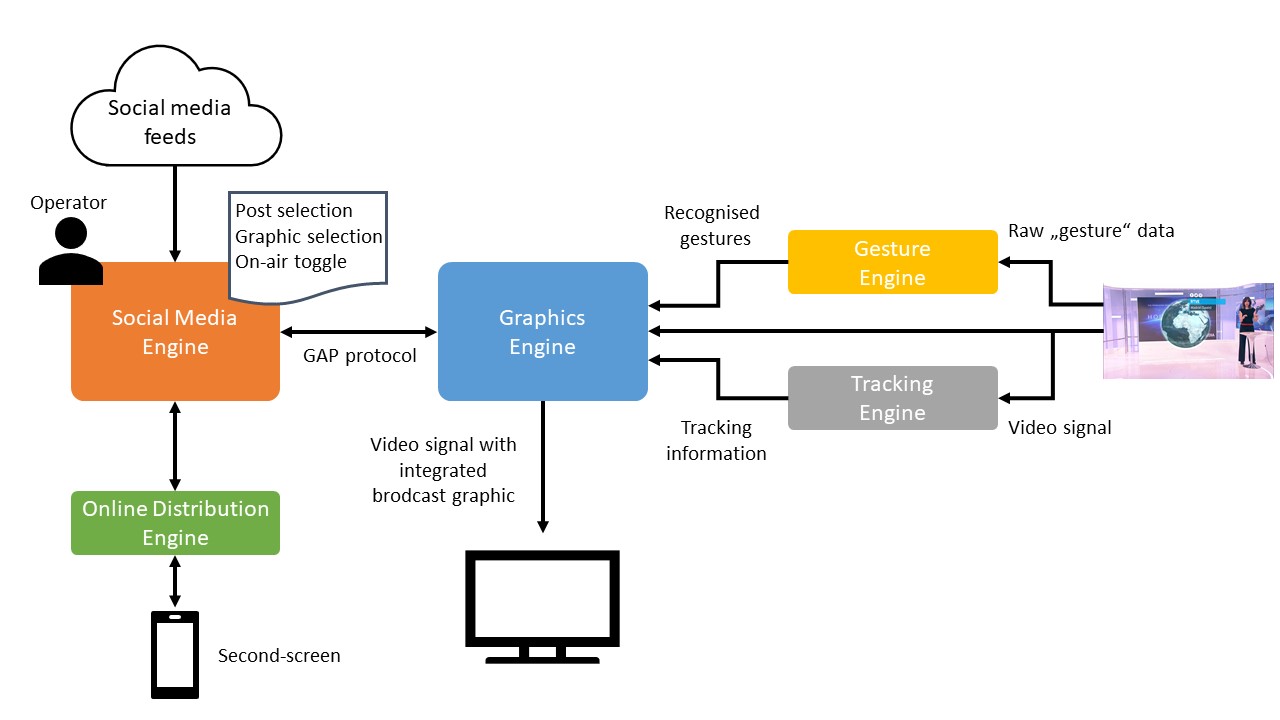

The resulting modular architecture consists of different components, so-called engines, each fulfilling a specific function in the workflow. The chain starts with the Social Media Engine (SME), which allows the operator or editor to acquire social media posts from different sources (Twitter, Facebook or Instagram) in the form of feeds. The operator selects posts according to chosen search criteria and combines them into collections, which can contain posts from different sources. In a second step, these collections are fed into the Graphics Engine (GE), which embeds the posts of a collection into the broadcast graphic and creates the overlay for the broadcast (video) signal. A tight coupling between SME and GE makes it possible for a single operator to cover multiple steps within the same user interface: selecting the content, enriching it graphically according to templates, and finally putting the overlay on air. In addition to a fixed overlay, e.g. a lower third, elements can also be placed freely in the studio space, e.g. beside the moderator. This is achieved with a Tracking Engine (TE) that works in both virtual and traditional studios. The components and the resulting architecture in the tight coupling configuration, based on the Graphics-Automation-Protocol (GAP) interface, are shown in the illustration below.

Architecture of the VisualMedia platform

In addition to the tight coupling described above, in which one operator does everything, a loose coupling between SME and GE is also possible, in which different operators perform different roles: an SME operator selects the social media posts and a GE operator takes care of the graphical presentation. By providing well-defined interfaces, the open architecture of the loose coupling also allows legacy or third-party components to be integrated.
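The hand-over from SME to GE can be pictured with a small Python sketch. All names here (`Post`, `Collection`, `GraphicsEngine`, `TextOverlayEngine`, `play_out`) are hypothetical and chosen for illustration only; they are not the VisualMedia or GAP interfaces. The sketch merely shows how a well-defined interface lets any renderer, including a third-party one, consume a collection of posts.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class Post:
    source: str  # e.g. "twitter", "facebook" or "instagram"
    author: str
    text: str


@dataclass
class Collection:
    """A named selection of posts, possibly from different sources."""
    name: str
    posts: list[Post] = field(default_factory=list)


class GraphicsEngine(Protocol):
    """Hypothetical interface that any renderer could implement."""

    def render(self, post: Post, template: str) -> str: ...


class TextOverlayEngine:
    """Stand-in renderer: returns a text description, not a video overlay."""

    def render(self, post: Post, template: str) -> str:
        return f"[{template}] @{post.author} via {post.source}: {post.text}"


def play_out(collection: Collection, engine: GraphicsEngine, template: str) -> list[str]:
    """Feed every post of a collection through the graphics engine, in order."""
    return [engine.render(post, template) for post in collection.posts]


collection = Collection("weather", [
    Post("twitter", "anna", "Sunny in Madrid"),
    Post("instagram", "ben", "Fog over the bay"),
])
overlays = play_out(collection, TextOverlayEngine(), "lower-third")
```

In a tight coupling, one user interface would both build the collection and call `play_out`; in a loose coupling, the two steps could sit with different operators or different products, as long as both sides agree on the interface.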

Moreover, especially for smaller broadcasters, a further step that is somewhat radical from a traditional perspective might be sensible: no operator at all, with everything controlled directly by the moderator in front of the camera. This was achieved by integrating a deep-learning-based Gesture Engine that allows specific actions to be triggered by hand gestures, e.g. selecting the next Tweet in a pre-defined collection.

Second-screen applications allow the audience to become more immersed in the broadcast content. IRT therefore created a scalable, cloud-based Online Distribution Engine (ODE) within the project. It provides additional social media content on tablets and smartphones and supports features such as interactive polls, whose results can be displayed in the actual broadcast graphic. The ODE also simplifies the embedding of social media posts into HbbTV applications.
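How poll results might be prepared for display in the broadcast graphic can be sketched in a few lines of Python. This is an illustrative assumption rather than the ODE's actual logic: the function name `tally_votes`, the vote format, and the rule that only a viewer's latest valid vote counts are all hypothetical.

```python
from collections import Counter


def tally_votes(votes, options):
    """Return the share of each option as a rounded percentage.

    votes: iterable of (viewer_id, option) pairs in order of arrival.
    Assumptions: a later vote replaces the viewer's earlier one, and
    votes for unknown options are ignored.
    """
    latest = {}
    for viewer_id, option in votes:
        if option in options:
            latest[viewer_id] = option
    counts = Counter(latest.values())
    total = sum(counts.values())
    return {
        option: round(100 * counts[option] / total) if total else 0
        for option in options
    }


votes = [("v1", "A"), ("v2", "B"), ("v1", "B"), ("v3", "C"), ("v4", "B")]
results = tally_votes(votes, ["A", "B", "C"])
```

Because each percentage is rounded on its own, the displayed values will not always add up to exactly 100.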

Social Media Engine

The Social Media Engine (SME) provides the interface between the different social media platforms and the VisualMedia platform. It is responsible for harvesting social media content in the first step of the production workflow. Harvesting means fetching public posts based on the hashtags or keywords that social media users included in them. Before content is forwarded to the GE, it is pre-processed by filtering, e.g. blocking posts with unsuitable language or selecting specific content types, followed by the selection of the specific posts that are finally forwarded. Generic data sources can also be included, for example a show-specific smartphone app, which enables interaction with viewers who do not use social media. In the tight coupling configuration, the operator can also select the graphics template for generating the broadcast overlay, so that a single operator is sufficient.
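The harvesting and filtering steps can be illustrated with a minimal Python sketch. It works on an in-memory list rather than on real platform APIs, and the `Post` fields, the `harvest` function and the simple word blocklist are assumptions made for this example, not the SME's actual filters.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    source: str
    text: str
    has_image: bool = False


def hashtags(text):
    """Return the lower-cased hashtags found in a post text."""
    return {tag.lower() for tag in re.findall(r"#(\w+)", text)}


def words(text):
    """Return the lower-cased words of a post text."""
    return set(re.findall(r"\w+", text.lower()))


def harvest(posts, wanted_tags, blocked_words, images_only=False):
    """Keep posts that carry a wanted hashtag and pass the filters."""
    wanted = {tag.lower() for tag in wanted_tags}
    blocked = {word.lower() for word in blocked_words}
    selected = []
    for post in posts:
        if not hashtags(post.text) & wanted:
            continue  # not about the harvested topic
        if words(post.text) & blocked:
            continue  # unsuitable language
        if images_only and not post.has_image:
            continue  # content-type filter
        selected.append(post)
    return selected


feed = [
    Post("twitter", "Lovely sunset tonight #weather", has_image=True),
    Post("facebook", "This #weather is damn awful"),
    Post("instagram", "New shoes #fashion", has_image=True),
]
selected = harvest(feed, ["weather"], ["damn"])
```

Only the first post survives here: the second is blocked by the word list and the third does not carry the harvested hashtag.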

Graphics Engine and Tracking Engine

The Graphics Engine (GE) is responsible for generating the broadcast overlay, i.e. for presenting the posts selected in the SME in a design suitable for the TV screen. It takes care of positioning the overlay with the correct perspective within the scene captured by the camera and of mixing the overlay with the camera signal before the combined signal is played out to the viewers via the existing broadcast infrastructure. Examples of how posts are shown in the broadcast can be seen in the following video.

To position a social media post correctly within the (virtual) studio environment, the camera movement has to be tracked. The camera tracking derives the camera motion from the camera (video) signal itself, without the need for additional hardware. It uses the structure-from-motion concept, which finds fixed objects in the scene and uses them to estimate the motion of the camera relative to them, as shown in the following video.
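Why the camera pose matters for placing a post in the scene can be illustrated with a standard pinhole projection. The sketch below does not implement structure from motion; it assumes that a tracker has already delivered the camera position and pan angle, and it shows how a fixed 3D anchor point then maps to a pixel position. The function `project_point`, the pan-only camera model and the coordinate conventions are simplifying assumptions for this example.

```python
import math


def project_point(point, camera_pos, yaw, focal_px, centre):
    """Project a 3D world point into pixel coordinates (pinhole camera).

    Assumed conventions: x points right, y down, z forward; yaw is the
    pan angle in radians around the vertical axis, positive to the right;
    focal_px is the focal length in pixels; centre is the principal point.
    Returns None if the point lies behind the camera.
    """
    dx = point[0] - camera_pos[0]
    dy = point[1] - camera_pos[1]
    dz = point[2] - camera_pos[2]
    # Express the offset in the camera's own coordinate frame.
    x_cam = dx * math.cos(yaw) - dz * math.sin(yaw)
    y_cam = dy
    z_cam = dx * math.sin(yaw) + dz * math.cos(yaw)
    if z_cam <= 0:
        return None
    u = centre[0] + focal_px * x_cam / z_cam
    v = centre[1] + focal_px * y_cam / z_cam
    return (u, v)


anchor = (1.0, 0.0, 4.0)   # overlay anchor, 1 m right of and 4 m in front of the origin
centre = (960.0, 540.0)    # image centre of a 1920x1080 frame
straight = project_point(anchor, (0.0, 0.0, 0.0), 0.0, 1000.0, centre)
panned = project_point(anchor, (0.0, 0.0, 0.0), 0.1, 1000.0, centre)
```

When the camera pans to the right, the anchor moves to the left in the image, so the overlay has to be redrawn at the new pixel position in every frame to appear fixed in the studio.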

Gesture Engine

The Gesture Engine allows the platform to be controlled directly by the moderator in the studio, who can operate the entire system once it has been suitably pre-configured. Gestures can be used, for example, to switch between different social media posts or to fade the social media overlay in and out.

Before the system can be controlled via gestures, the gestures have to be recognised robustly. This was achieved by using Microsoft Kinect for far-field and Leap Motion for near-field recognition. Whereas the former detects the position of limbs from a distance, the latter detects individual finger positions from close up. The Leap Motion solution is therefore more suitable if the moderator is positioned behind a desk, and the Microsoft Kinect solution is more suitable if the moderator roams freely within the studio. A typical setup for the Leap Motion solution is shown in the image below.

Controlling the system via gestures with Leap Motion

The raw data from these two devices then needs to be assigned to one of the gestures, which in turn initiates a certain action, e.g. switching to the next post. This was achieved with a neural network with a deep learning architecture, trained to associate finger, hand and arm movements with a specific gesture. The gestures used and the different setups for the two devices are shown in the video below.
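The step from a recognised gesture to an action can be sketched as a simple dispatch table. The neural network itself is not reproduced here; the sketch assumes that a classifier has already produced a gesture label with a confidence value. The gesture names, the `OverlayState` fields and the threshold of 0.8 are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class OverlayState:
    post_count: int
    index: int = 0
    visible: bool = False


def next_post(state):
    state.index = (state.index + 1) % state.post_count


def previous_post(state):
    state.index = (state.index - 1) % state.post_count


def toggle_overlay(state):
    state.visible = not state.visible


# Hypothetical gesture labels mapped to the actions they trigger.
ACTIONS = {
    "swipe_left": next_post,
    "swipe_right": previous_post,
    "open_hand": toggle_overlay,
}


def handle_gesture(state, label, confidence, threshold=0.8):
    """Run the action for a gesture; ignore unknown or uncertain ones."""
    action = ACTIONS.get(label)
    if action is None or confidence < threshold:
        return False
    action(state)
    return True


state = OverlayState(post_count=3)
handle_gesture(state, "open_hand", 0.95)   # fades the overlay in
handle_gesture(state, "swipe_left", 0.90)  # moves to the second post
handle_gesture(state, "swipe_left", 0.50)  # too uncertain, ignored
```

Rejecting low-confidence classifications is one way to keep ordinary hand movements of the moderator from triggering actions on air.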

Online Distribution Engine

The last component is the cloud-based Online Distribution Engine (ODE) developed by IRT, which provides an interactive interface between viewers and broadcaster. It consists of a scalable backend and a universal frontend application. The frontend application can be used on smartphones, tablets, PCs and HbbTV-capable TV sets alike. It not only lets broadcasters present additional social media content that did not fit into the broadcast overlay on companion devices, but also provides a direct and convenient feedback channel on the TV set itself.

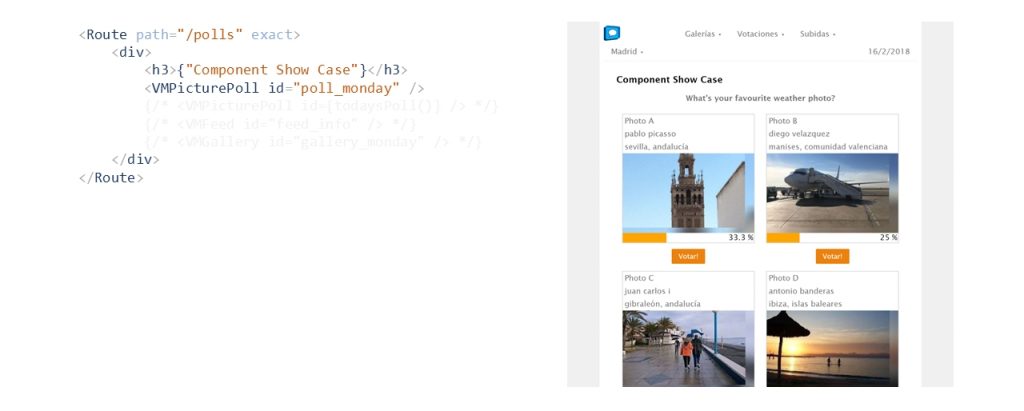

A major goal in the development of the ODE was to allow easy integration into (web) applications. This was achieved by abstracting the interface to the SME so that application developers need no knowledge of the inner workings of the SME. The ODE therefore provides software components for presenting social media posts (both individually and in a carousel), polls, and (image) galleries. Each component can be integrated with a single line of code as shown below.

Integrating social media posts easily with the ODE

Showcase

In a joint showcase, IRT worked closely with the Spanish public broadcaster RTVE and other project partners to develop a second-screen app. The app is an interactive extension of a popular weather show on one of RTVE’s main TV channels. It lets viewers upload pictures of the current weather and then asks them to vote in a poll for the best picture of each day, as shown in the above image (right side). The complete workflow, including setting up the system with all components of the VisualMedia platform, is demonstrated in the video below.

Conclusion

The VisualMedia platform enables broadcasters to easily include and embed user-generated content and viewer feedback from social media channels in the broadcast content itself. The modularity of the architecture described above allows it to be adapted to existing workflows. Automating many steps in the workflow has clear potential to reduce the operator effort needed to bring social media onto the TV screen.

The VisualMedia project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 687800.