A few months ago, IRT and BR began collaborating within the EU-funded project ORPHEUS to produce content for the project pilots. The aim of this project is to implement a complete end-to-end chain for object-based audio, from capture to consumption. This technology offers the end user features such as interactivity, personalization, accessibility and immersion. For further information, see our object-based demo page or listen to the explanatory radio feature below.

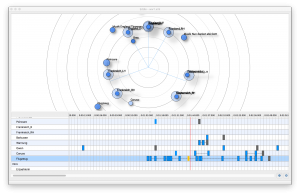

The object-based production tools used here were developed by IRT and should be seen as proof-of-concept software that lets both researchers and sound engineers gain experience with this new technology. The tools include an editor with a graphical user interface for controlling the object positions as well as other metadata, such as gain. More importantly, the editor controls the signal processing under the hood: a so-called renderer, which takes all audio signals and their metadata and renders a signal suitable for the desired playback system (Stereo, 5.1, 22.2, binaural, etc.). The combination of editor and renderer is real-time capable and can be connected to any DAW (Digital Audio Workstation).
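To make the idea of a renderer more concrete, here is a minimal sketch in Python of how object signals and metadata could be turned into a Stereo signal. It is not the IRT renderer: the `AudioObject` class, the azimuth convention (+30° fully left, -30° fully right) and the simple constant-power pan law are assumptions chosen for illustration only. A real renderer handles many more loudspeaker layouts, time-varying metadata and binaural output.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AudioObject:
    """Hypothetical audio object: a mono signal plus its metadata."""
    samples: List[float]   # mono audio samples
    azimuth_deg: float     # assumed convention: +30 = fully left, -30 = fully right
    gain: float = 1.0      # linear gain taken from the metadata


def pan_gains(azimuth_deg: float, spread_deg: float = 30.0) -> Tuple[float, float]:
    """Return (left, right) gains using a constant-power sine/cosine pan law."""
    # Clamp the position to the range covered by the two loudspeakers.
    az = max(-spread_deg, min(spread_deg, azimuth_deg))
    # Map the azimuth to an angle between 0 (fully right) and pi/2 (fully left).
    theta = (az + spread_deg) / (2.0 * spread_deg) * (math.pi / 2.0)
    return math.sin(theta), math.cos(theta)


def render_stereo(objects: List[AudioObject]) -> Tuple[List[float], List[float]]:
    """Mix all objects into a left and a right channel."""
    n = max((len(obj.samples) for obj in objects), default=0)
    left = [0.0] * n
    right = [0.0] * n
    for obj in objects:
        g_left, g_right = pan_gains(obj.azimuth_deg)
        for i, sample in enumerate(obj.samples):
            left[i] += obj.gain * g_left * sample
            right[i] += obj.gain * g_right * sample
    return left, right


if __name__ == "__main__":
    # Illustrative objects: a centred voice and an ambience placed fully left.
    narrator = AudioObject(samples=[0.2, 0.4, 0.1], azimuth_deg=0.0, gain=1.0)
    ambience = AudioObject(samples=[0.1, 0.1, 0.1], azimuth_deg=30.0, gain=0.5)
    left, right = render_stereo([narrator, ambience])
    print(left, right)
```

Because the positions and gains are kept as metadata rather than baked into the mix, the same objects could be passed to a different rendering function for 5.1, 22.2 or binaural playback without changing the production itself.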

The sound engineers from BR started the work with planning, capturing and mixing on their own premises (monitoring mainly in Stereo, 5.1 and binaural), equipped with our production tools, and then visited us in our new 3D audio lab to fine-tune the productions.

IRT engineers and BR colleagues in our 3D audio lab, listening to the object-based content in a 22.2 loudspeaker setup

In our lab, we were finally able to listen to the object-based mix in different loudspeaker configurations, from Stereo up to a full-blown rendering on 40 loudspeakers, and to compare the spatial resolution with that of the binaural version. It was very impressive, but also enlightening to experience where there are still things to consider when spatializing sound events in order to maximise the perceptual effect.

In the meantime, two pieces from this collaboration were rendered for several formats (Stereo, 5.1, binaural, etc.) and eventually went on air on B2. The broadcast versions of these productions are still available on demand here and here. The binaural versions of those pieces can also be listened to below, along with a screencast of the editor and ProTools projects:

The next step will be to record and produce English and French versions of the narration, so that excerpts of these pieces are accessible for the project pilots, which are scheduled for August 2017.